
guest 2026-02-19 |


Warhol Installation

Warhol

Warhol is a Proxmox node that runs on the GPU server; in essence, Warhol is the GPU machine.

Warhol is being set up through Proxmox.

To reach Warhol, first open an SSH tunnel (a SOCKS proxy) through olimp or home, then start a local browser instance (Chromium) that is proxied over that SSH connection:

ssh -D 8082 home

# in a different terminal

chromium --proxy-server="socks5://127.0.0.1:8082"

Navigate to https://172.16.10.215:8006/ in Chromium and log in with the username and password provided by Črt.
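The tunnel and the browser start can be wrapped into one helper. This is a minimal sketch, assuming the host alias home from ~/.ssh/config, local port 8082 and a binary named chromium; the function names are hypothetical. A throwaway --user-data-dir is used because a Chromium instance that is already running may ignore the --proxy-server flag.

```shell
# Sketch: open the Warhol Proxmox UI through an SSH SOCKS tunnel.
# Assumed (hypothetical) defaults: jump host "home", local port 8082, "chromium" binary.
PROXY_PORT="${PROXY_PORT:-8082}"
JUMP_HOST="${JUMP_HOST:-home}"
PVE_URL="https://172.16.10.215:8006/"

# Print the Chromium flag that routes browser traffic through the local SOCKS5 proxy.
chromium_proxy_flag() {
  printf '%s\n' "--proxy-server=socks5://127.0.0.1:$1"
}

open_warhol_ui() {
  # -f: go to background after authentication, -N: run no remote command,
  # -D: dynamic (SOCKS) port forward on the given local port.
  ssh -f -N -D "$PROXY_PORT" "$JUMP_HOST" || return 1
  # A fresh profile directory forces a new browser process, so the proxy flag applies.
  chromium "$(chromium_proxy_flag "$PROXY_PORT")" \
    --user-data-dir="$(mktemp -d)" "$PVE_URL"
}
```

The backgrounded ssh process keeps the tunnel open after the browser is closed, until it is killed.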

Warhol is one of two nodes in the cluster; the other, kobilica, will be used for storage.

Switching to non-enterprise version

In /etc/apt/sources.list.d, disable the existing enterprise sources by renaming them:

cd /etc/apt/sources.list.d

mv pve-enterprise.list pve-enterprise.list.disabled

mv ceph.list ceph.list.disabled

Add sources that point to the community (no-subscription) Proxmox repositories, then update the package lists:

echo "deb http://download.proxmox.com/debian/pve bookworm pve-no-subscription" >> pve-no-subscription.list

echo "deb http://download.proxmox.com/debian/ceph-quincy bookworm no-subscription" >> ceph-no-subscription.list

apt-get update
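Appending with >> duplicates the entries if these steps are ever repeated. Below is a minimal idempotent sketch of the same switch, with the target directory as a parameter so it can be tried on a scratch directory first; the function name is hypothetical. The .disabled rename works because apt only reads files ending in .list or .sources from this directory.

```shell
# Sketch: switch a Proxmox VE (bookworm) node to the no-subscription repositories.
# Run as root; the directory argument defaults to the real apt location.
switch_to_no_subscription() {
  local dir f
  dir="${1:-/etc/apt/sources.list.d}"
  for f in pve-enterprise.list ceph.list; do
    # Renaming disables the source: apt ignores files without a .list/.sources suffix.
    if [ -e "$dir/$f" ]; then
      mv "$dir/$f" "$dir/$f.disabled"
    fi
  done
  # '>' instead of '>>' so that re-running does not duplicate the entries.
  echo "deb http://download.proxmox.com/debian/pve bookworm pve-no-subscription" \
    > "$dir/pve-no-subscription.list"
  echo "deb http://download.proxmox.com/debian/ceph-quincy bookworm no-subscription" \
    > "$dir/ceph-no-subscription.list"
}
```

Usage on Warhol: switch_to_no_subscription && apt-get update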

Setting up NVIDIA GPU

vGPU (abandoned)

Assuming that more than one VM would use the GPU, a vGPU strategy was employed.

To that end, nouveau was blacklisted:

echo "blacklist nouveau" >> /etc/modprobe.d/nouveau-blacklist.conf

update-initramfs -u -k all

shutdown -r now

# after the reboot (which took a while)

lsmod | grep nouveau

# no output: nouveau is not loaded
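A plain grep on lsmod output can also match unrelated module names. A stricter check compares the first column of /proc/modules (the same data lsmod prints) with an exact module name. This is a sketch; the function name is hypothetical, and the optional second argument only exists so the check can be tried on a saved copy of the file.

```shell
# Sketch: exit status 0 if the named kernel module is currently loaded.
# /proc/modules lists one module per line; the first field is the module name.
module_loaded() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

# Usage after the reboot:
#   module_loaded nouveau && echo "nouveau still loaded" || echo "nouveau not loaded"
```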

Install DKMS:

apt update

apt install dkms libc6-dev proxmox-default-headers --no-install-recommends

It turned out that vGPU requires a paid NVIDIA license. The alternative is a pass-through setup, where the GPU is assigned to a single VM.

Pass-through

Following the pass-through manual, nvidia* was also added to the blacklisted modules.

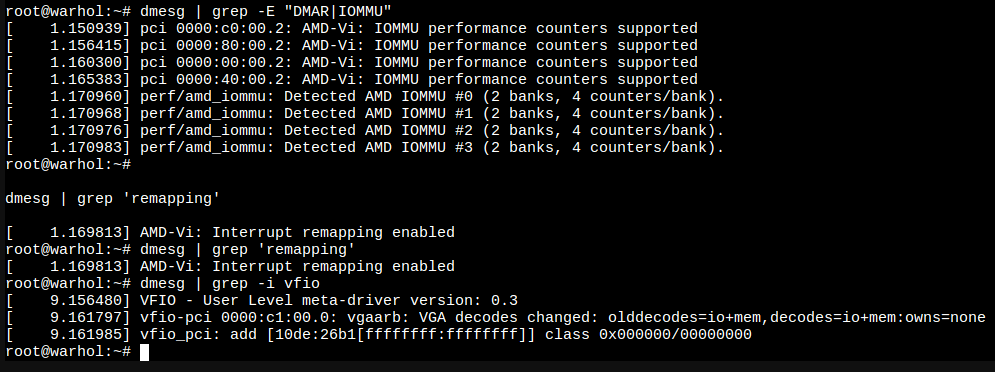

Following the official PCIe passthrough guide (or a forum tutorial), add the vfio* modules to /etc/modules:

echo "vfio" >> /etc/modules

echo "vfio_iommu_type1" >> /etc/modules

echo "vfio_pci" >> /etc/modules

update-initramfs -u -k all
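As with the repository files, echo >> appends duplicate lines when repeated. A minimal sketch of an idempotent alternative, assuming a grep that supports -q, -x and -F; the helper name append_once is hypothetical. The same helper would also fit the blacklist files in /etc/modprobe.d.

```shell
# Sketch: append a line to a file only if that exact line is not already present.
append_once() {
  # -q: quiet, -x: whole-line match, -F: fixed string (no regex).
  # A missing file makes grep fail, so the line is appended and the file created.
  grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Usage on Warhol (as root), followed by update-initramfs -u -k all:
#   for m in vfio vfio_iommu_type1 vfio_pci; do append_once "$m" /etc/modules; done
```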

After a reboot, the new modules should be loaded; check with:

lsmod | grep vfio

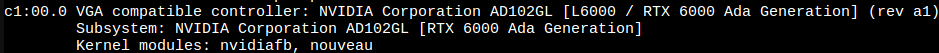

vfio is now loaded, but the NVIDIA card is not yet bound to it, which can be checked with:

lspci -k

First, find the PCI ID of the card:

>lspci -nn

c1:00.0 VGA compatible controller [0300]: NVIDIA Corporation AD102GL [L6000 / RTX 6000 Ada Generation] [10de:26b1] (rev a1)

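The ID is the vendor:device pair in the last brackets, [10de:26b1].

Next, the tutorial suggests telling vfio-pci which device IDs to claim, so that modprobe binds the card to vfio-pci rather than to a regular graphics driver: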

echo "options vfio-pci ids=10de:26b1" >> /etc/modprobe.d/vfio.conf

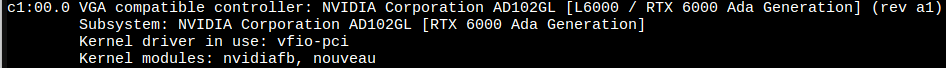
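Instead of copying the ID by hand, it can be extracted from lspci -nn output. A sketch, assuming (as in the line above) that the last [xxxx:xxxx] bracket on each line is the vendor:device ID; the function name is hypothetical. lspci -nn -s c1:00 lists every function of the card, and vfio-pci accepts several comma-separated IDs, which matters if other functions of the card (such as its audio controller) should be claimed too. Note that the usage line overwrites vfio.conf with > instead of appending.

```shell
# Sketch: build the vfio-pci "options" line for all functions in one PCI slot.
# Reads `lspci -nn` output on stdin; $1 is the bus:device part, e.g. c1:00.
vfio_options_for_slot() {
  awk -v slot="$1" '
    index($0, slot ".") == 1 {
      # Last [hex4:hex4] on the line is the vendor:device ID (class codes have no colon).
      if (match($0, /\[[0-9a-f][0-9a-f][0-9a-f][0-9a-f]:[0-9a-f][0-9a-f][0-9a-f][0-9a-f]][^[]*$/)) {
        id = substr($0, RSTART + 1, 9)
        ids = (ids == "") ? id : (ids "," id)
      }
    }
    END { print "options vfio-pci ids=" ids }
  '
}

# Usage on Warhol (as root):
#   lspci -nn -s c1:00 | vfio_options_for_slot c1:00 > /etc/modprobe.d/vfio.conf
```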

After a reboot, the GPU entry in lspci -k should show vfio-pci as the kernel driver in use:

lspci -k
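To check one device instead of reading the whole lspci -k listing, the "Kernel driver in use" line can be picked out for a given slot. A sketch that assumes lspci's usual layout (a device header line followed by tab-indented detail lines); the function name is hypothetical. On Warhol the expected answer for c1:00.0 is vfio-pci; inside the VM, the same check on the guest's slot should print nvidia once the driver is installed.

```shell
# Sketch: print the driver bound to one PCI function, reading `lspci -k` on stdin.
# Prints nothing if no driver is bound (only "Kernel modules:" candidates listed).
driver_in_use() {
  awk -v slot="$1" '
    /^[^ \t]/ { in_dev = (index($0, slot " ") == 1) }   # header line of a device
    in_dev && /Kernel driver in use:/ {
      sub(/.*Kernel driver in use: */, "")
      print
      exit
    }
  '
}

# Usage:
#   lspci -k | driver_in_use c1:00.0     # expected on Warhol: vfio-pci
```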

Final steps:

The PCI device then has to be added to the VM in the Hardware section of the Proxmox VM setup: Add -> PCI Device -> Raw Device -> select the GPU. I also ticked the All Functions check box. After that, the VM showed the GPU in its lspci output.
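The same assignment can also be made from the Warhol shell with qm set, which writes a hostpciN entry into the VM configuration. A sketch, assuming jama is VM 100 (the ID in the error message of the disk-resizing section) and that the GPU sits at c1:00.0 on the host; the helper name is hypothetical. Ticking All Functions corresponds to giving the address without the trailing .function part.

```shell
# Sketch: build the value for a Proxmox "hostpciN" entry from a host PCI address.
# With all functions (the "All Functions" checkbox), the ".function" suffix is dropped.
hostpci_value() {
  if [ "${2:-all}" = "all" ]; then
    printf '%s\n' "${1%.*}"     # c1:00.0 -> c1:00
  else
    printf '%s\n' "$1"          # single function only
  fi
}

# Usage on Warhol (as root), assuming VM ID 100 is jama:
#   qm set 100 -hostpci0 "$(hostpci_value c1:00.0)"
```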

Jama installation

Setup of jama

Jama is a well-known Slovenian painter, and the workstation named after him will be used as the GPU and analysis PC.

GPU

After the Warhol setup, lspci on jama shows the passed-through GPU:

>lspci

00:10.0 VGA compatible controller: NVIDIA Corporation AD102GL [L6000 / RTX 6000 Ada Generation] (rev a1)

00:10.1 Audio device: NVIDIA Corporation AD102 High Definition Audio Controller (rev a1)

Interestingly, the audio controller came along too: it is the second function (00:10.1) of the same card, included because All Functions was ticked. More specifically,

>lspci -k

00:10.0 VGA compatible controller: NVIDIA Corporation AD102GL [L6000 / RTX 6000 Ada Generation] (rev a1)

Subsystem: NVIDIA Corporation AD102GL [RTX 6000 Ada Generation]

Kernel modules: nouveau

shows that no driver is in use yet; nouveau is only listed as an available kernel module.

NVIDIA Driver (aka CUDA)

For the NVIDIA driver, I followed NVIDIA's installation instructions.

The pre-installation checks require gcc:

sudo apt-get install gcc

Install the CUDA toolkit using the network (web) installer:

wget https://developer.download.nvidia.com/compute/cuda/repos/debian12/x86_64/cuda-keyring_1.1-1_all.deb

sudo dpkg -i cuda-keyring_1.1-1_all.deb

sudo apt-get update

sudo apt-get -y install cuda-toolkit-12-8

sudo apt-get -V install nvidia-open

# for a compute-only system, replace the last command with:

# sudo apt -V install nvidia-driver-cuda nvidia-kernel-open-dkms
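The installation above can be collected into one function with a dry-run switch, which makes it easy to review the exact commands before running them. A sketch under these assumptions: Debian 12 on x86_64, CUDA 12.8 as above, and -y added for non-interactive use; run, install_cuda and DRY_RUN are hypothetical names. The compute mode installs the package pair from the comment above instead of nvidia-open.

```shell
# Sketch: CUDA toolkit + NVIDIA open driver from NVIDIA's Debian 12 network repository.
# DRY_RUN=1 prints the commands instead of executing them.
CUDA_PKG_VERSION="${CUDA_PKG_VERSION:-12-8}"
KEYRING_DEB="cuda-keyring_1.1-1_all.deb"
REPO_URL="https://developer.download.nvidia.com/compute/cuda/repos/debian12/x86_64"

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# $1: "full" (default, nvidia-open) or "compute" (compute-only driver packages).
install_cuda() {
  run sudo apt-get -y install gcc || return 1
  run wget "$REPO_URL/$KEYRING_DEB" || return 1
  run sudo dpkg -i "$KEYRING_DEB" || return 1
  run sudo apt-get update || return 1
  run sudo apt-get -y install "cuda-toolkit-$CUDA_PKG_VERSION" || return 1
  if [ "${1:-full}" = "compute" ]; then
    run sudo apt-get -y install nvidia-driver-cuda nvidia-kernel-open-dkms
  else
    run sudo apt-get -y install nvidia-open
  fi
}
```

Usage: DRY_RUN=1 install_cuda to review, then install_cuda (or install_cuda compute) to run it.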

Then reboot. The nvidia driver is now in use for the device:

>lspci -k

00:10.0 VGA compatible controller: NVIDIA Corporation AD102GL [L6000 / RTX 6000 Ada Generation] (rev a1)

Subsystem: NVIDIA Corporation AD102GL [RTX 6000 Ada Generation]

Kernel driver in use: nvidia

Kernel modules: nouveau, nvidia_drm, nvidia

Post installation steps

I skipped the persistence daemon (nvidia-persistenced), as it did not seem relevant.

Driver version

>cat /proc/driver/nvidia/version

NVRM version: NVIDIA UNIX Open Kernel Module for x86_64 570.86.15 Release Build (dvs-builder@U16-I2-C03-12-4) Thu Jan 23 22:50:36 UTC 2025

GCC version: gcc version 12.2.0 (Debian 12.2.0-14)
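For scripts, the version number can be pulled out of that file. A sketch that assumes the number is the field right before "Release Build", as in the output above; the function name is hypothetical. nvidia-smi --query-gpu=driver_version --format=csv,noheader reports the same value through NVIDIA's own tool.

```shell
# Sketch: print the loaded NVIDIA kernel module version (e.g. 570.86.15).
nvidia_driver_version() {
  awk '/^NVRM version:/ {
         for (i = 1; i < NF; i++)
           if ($(i + 1) == "Release") { print $i; exit }
       }' "${1:-/proc/driver/nvidia/version}"
}

# Usage on jama:
#   nvidia_driver_version
```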

Local repository removal

> sudo apt remove --purge nvidia-driver-local-repo-$distro*

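E: Unable to locate package nvidia-driver-local-repo

This step only applies to the local-repository installer; since the network installer (cuda-keyring) was used here, there is no such package to remove.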

CUDA Samples

I had to add PATH and LD_LIBRARY_PATH to ~/.bashrc so that cmake would find CUDA:

[...]

## CUDA

export PATH=/usr/local/cuda-12.8/bin${PATH:+:${PATH}}

export LD_LIBRARY_PATH=/usr/local/cuda-12.8/lib64${LD_LIBRARY_PATH:+:${LD_LIBRARY_PATH}}
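The ${PATH:+:${PATH}} form avoids a stray colon when the variable is empty, but every re-read of .bashrc (for example in a nested shell) prepends the CUDA directories again. A sketch of an idempotent variant for .bashrc; prepend_path is a hypothetical helper name.

```shell
# Sketch: print $1 (a colon-separated list) with directory $2 prepended,
# unless $2 is already one of its entries. An empty $1 yields just $2.
prepend_path() {
  case ":$1:" in
    *":$2:"*) printf '%s\n' "$1" ;;
    *) printf '%s\n' "$2${1:+:$1}" ;;
  esac
}

## CUDA
PATH=$(prepend_path "${PATH:-}" /usr/local/cuda-12.8/bin)
LD_LIBRARY_PATH=$(prepend_path "${LD_LIBRARY_PATH:-}" /usr/local/cuda-12.8/lib64)
export PATH LD_LIBRARY_PATH
```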

Then I deleted the old cuda-samples build directory and started fresh:

cd software/src

git clone https://github.com/NVIDIA/cuda-samples.git

cd ..

mkdir build/cuda-samples

cd build/cuda-samples

cmake ../../src/cuda-samples

make -j8
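The same fresh out-of-source build can be written with CMake's -S/-B options, which avoids the cd steps, and with nproc instead of a fixed -j8. A sketch assuming the ~/software/src and ~/software/build layout used above and CMake 3.13 or newer; build_cuda_samples, run, DRY_RUN and JOBS are hypothetical names.

```shell
# Sketch: clone (if needed) and rebuild NVIDIA's cuda-samples from scratch.
# DRY_RUN=1 prints the commands instead of executing them.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

build_cuda_samples() {
  local root src build
  root="${1:-$HOME/software}"
  src="$root/src/cuda-samples"
  build="$root/build/cuda-samples"
  if [ ! -d "$src" ]; then
    run git clone https://github.com/NVIDIA/cuda-samples.git "$src" || return 1
  fi
  run rm -rf "$build" || return 1              # start fresh, as above
  run cmake -S "$src" -B "$build" || return 1  # configure; -B creates the directory
  run cmake --build "$build" --parallel "${JOBS:-$(nproc)}"
}
```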

Some suggested tests:

andrej@jama:~/software/build/cuda-samples/Samples/1_Utilities/deviceQuery$ ./deviceQuery

./deviceQuery Starting...

CUDA Device Query (Runtime API) version (CUDART static linking)

Detected 1 CUDA Capable device(s)

Device 0: "NVIDIA RTX 6000 Ada Generation"

CUDA Driver Version / Runtime Version 12.8 / 12.8

CUDA Capability Major/Minor version number: 8.9

Total amount of global memory: 48520 MBytes (50876841984 bytes)

(142) Multiprocessors, (128) CUDA Cores/MP: 18176 CUDA Cores

GPU Max Clock rate: 2505 MHz (2.50 GHz)

Memory Clock rate: 10001 Mhz

Memory Bus Width: 384-bit

L2 Cache Size: 100663296 bytes

Maximum Texture Dimension Size (x,y,z) 1D=(131072), 2D=(131072, 65536), 3D=(16384, 16384, 16384)

Maximum Layered 1D Texture Size, (num) layers 1D=(32768), 2048 layers

Maximum Layered 2D Texture Size, (num) layers 2D=(32768, 32768), 2048 layers

Total amount of constant memory: 65536 bytes

Total amount of shared memory per block: 49152 bytes

Total shared memory per multiprocessor: 102400 bytes

Total number of registers available per block: 65536

Warp size: 32

Maximum number of threads per multiprocessor: 1536

Maximum number of threads per block: 1024

Max dimension size of a thread block (x,y,z): (1024, 1024, 64)

Max dimension size of a grid size (x,y,z): (2147483647, 65535, 65535)

Maximum memory pitch: 2147483647 bytes

Texture alignment: 512 bytes

Concurrent copy and kernel execution: Yes with 2 copy engine(s)

Run time limit on kernels: No

Integrated GPU sharing Host Memory: No

Support host page-locked memory mapping: Yes

Alignment requirement for Surfaces: Yes

Device has ECC support: Disabled

Device supports Unified Addressing (UVA): Yes

Device supports Managed Memory: Yes

Device supports Compute Preemption: Yes

Supports Cooperative Kernel Launch: Yes

Supports MultiDevice Co-op Kernel Launch: Yes

Device PCI Domain ID / Bus ID / location ID: 0 / 0 / 16

Compute Mode:

< Default (multiple host threads can use ::cudaSetDevice() with device simultaneously) >

deviceQuery, CUDA Driver = CUDART, CUDA Driver Version = 12.8, CUDA Runtime Version = 12.8, NumDevs = 1

Result = PASS

andrej@jama:~/software/build/cuda-samples$ ./Samples/1_Utilities/bandwidthTest/bandwidthTest

[CUDA Bandwidth Test] - Starting...

Running on...

Device 0: NVIDIA RTX 6000 Ada Generation

Quick Mode

Host to Device Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(GB/s)

32000000 26.7

Device to Host Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(GB/s)

32000000 26.3

Device to Device Bandwidth, 1 Device(s)

PINNED Memory Transfers

Transfer Size (Bytes) Bandwidth(GB/s)

32000000 4443.4

Result = PASS

NOTE: The CUDA Samples are not meant for performance measurements. Results may vary when GPU Boost is enabled.
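Both samples end with a Result = PASS line, so they can serve as a quick smoke test, for instance after a driver update. A sketch; check_sample is a hypothetical helper, and the paths assume the build directory used above.

```shell
# Sketch: run a CUDA sample and succeed only if it exits cleanly
# and prints a "Result = PASS" line.
check_sample() {
  local out
  out=$("$@" 2>&1) || return 1
  printf '%s\n' "$out" | grep -q '^Result = PASS'
}

# Usage from ~/software/build/cuda-samples:
#   for s in Samples/1_Utilities/deviceQuery/deviceQuery \
#            Samples/1_Utilities/bandwidthTest/bandwidthTest; do
#     check_sample "./$s" && echo "OK   $s" || echo "FAIL $s"
#   done
```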

Disk resizing

I attempted a disk resize, first following the instructions on Proxmox:

- Stop the VM.

- In the Proxmox web UI, I selected jama and increased its disk size by 3000 (GiB). An error popped up immediately:

TASK ERROR: unable to open file '/etc/pve/nodes/warhol/qemu-server/100.conf.tmp.1998375' - Permission denied

I was quite concerned. Oddly, the disk size changed in the dpool (warhol) storage, but the change did not show up in jama's hardware layout.

LATER: it turns out /etc/pve/nodes/warhol/qemu-server/100.conf is just a text file that does not necessarily reflect the actual disk layout, so the sizes shown under jama's hardware can be wrong.

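- Trying to start jama, a new error popped up:

proxmox cluster not ready no quorum (500)

Lowering the number of expected votes, following a suggestion I found, solved it. In a two-node cluster (warhol and kobilica), a single node holds only one of the two votes and is not quorate on its own; pvecm expected 1 lets warhol proceed alone. The earlier Permission denied error is probably a symptom of the same problem, since /etc/pve becomes read-only without quorum. While doing this, I had great trouble getting a terminal on warhol (the parent PC). The only way was to connect to olimp and ssh to warhol's local IP from there:

olimp $ ssh root@172.16.10.215

warhol $ pvecm expected 1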

- Update the partition on jama. I followed the notes on disk management, including replacing the partition with a GPT partition using fdisk (GPT is needed here because MBR partition tables are limited to 2 TiB). I didn't have to use parted, and for ext4 the correct command is resize2fs, as the link suggests.

$ ssh jama

$ sudo umount /data

$ sudo fdisk /dev/sdb

$ sudo e2fsck -f /dev/sdb1

e2fsck 1.47.0 (5-Feb-2023)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

home: 191683/67108864 files (1.1% non-contiguous), 150399120/268434944 blocks

$ sudo resize2fs /dev/sdb1

resize2fs 1.47.0 (5-Feb-2023)

Resizing the filesystem on /dev/sdb1 to 1055129088 (4k) blocks.

The filesystem on /dev/sdb1 is now 1055129088 (4k) blocks long.

After remounting /data, df shows the new size:

$ df -h

Filesystem Size Used Avail Use% Mounted on

udev 150G 0 150G 0% /dev

tmpfs 30G 724K 30G 1% /run

/dev/sda1 126G 90G 31G 75% /

tmpfs 150G 0 150G 0% /dev/shm

tmpfs 5.0M 0 5.0M 0% /run/lock

tmpfs 30G 0 30G 0% /run/user/1000

/dev/sdb1 3.9T 557G 3.2T 15% /data
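As a sanity check, the block counts reported by e2fsck and resize2fs can be converted to GiB (1 GiB = 262144 blocks of 4 KiB). A sketch with a hypothetical helper name; with the numbers above it gives about 1024 GiB before and about 4025 GiB after, i.e. roughly 3000 GiB more, in line with the increase made in Proxmox and with the 3.9T that df -h reports.

```shell
# Sketch: convert a block count to GiB (default block size 4096 bytes).
blocks_to_gib() {
  awk -v n="$1" -v bs="${2:-4096}" 'BEGIN { printf "%.1f\n", n * bs / 1073741824 }'
}

# Usage with the numbers above:
#   blocks_to_gib 268434944     # filesystem size before resize2fs
#   blocks_to_gib 1055129088    # after resize2fs
```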